How to Actually Use ChatGPT

“It is easy for those in business who are absorbed by cost reduction to forget that automatic production, if it means fewer and fewer jobs and a disregard of human costs and hardships, will in the end be damaging to the foundations of our free society.”

You might think I’m talking about LLMs here. But actually, I’m not. This comes from a US government report dated 5th January… 1956. Yep, it’s not about AI… it’s talking about computers.

It continues…

“The genius and industry which create and boast of "thinking machines" cannot and ought not to be allowed to shift all or portions of the problems created by them to the shoulders of Government and labor.”

“While most industrialists, […], have demonstrated understanding of the social responsibility of free business, the subcommittee has, unfortunately, found evidence that some of those busy in advancing the technical side of labor saving machines are still apparently unaware of the overall significance which their activities have to the economy.”

“Government, of necessity and by public demand, is concerned with levels of unemployment, with the impact of technological changes upon our business structure, and with the maintenance of mass purchasing power.”

Wow, this sounds eerily familiar. The exact same anxieties we have about LLMs today… we’ve had them before. Almost word for word.

And just like how computers became ubiquitous, we’re moving into a world where LLMs are a normal fact of life. So if these tools really are the next computers… we should probably learn how to use them properly.

This blog is your crash course, from one data scientist to another. Welcome to LLMs 101. Please throw your textbook out of the window.

An early computer from the 1950s, when automation fears first emerged.

Remember the early days of ChatGPT, when everyone thought prompt engineering was going to be the next big job? Instead, it has become part of our day-to-day, just like how we can competently google things on our own, thank you very much.

Whether you’re an LLM skeptic or an AI enthusiast, one fact is undeniable: LLMs have put down deep roots in our society in just a few short years. My personal philosophy is that you can’t form an informed opinion on something without understanding it, so today I’m going to do my best to share my understanding of how to work with LLMs effectively.

Now, I first have to caveat that I’m not trying to paint myself as an LLM expert, nor am I going to give you a deep dive into how these large language models work under the surface. Rather, I’m going to give you the informed opinion of someone who has taken a personal interest in understanding how to use them as an effective tool, so that you’re not starting from scratch.

ChatGPT home screen, asking how to use LLMs

This blog is a 101: an introductory model that you can build on with more advanced techniques. And importantly, it’s one you can send to your Mum, who is considering spending £1000 on an introductory LLM course because she’s worried about being left behind. Contrary to most of our content, there will be no data science today. This blog is a public service.

So let’s kick things off with one question: what does it mean when ChatGPT says it’s thinking?

When the laptop stares back… ChatGPT in thinking mode

Part 1: ChatGPT is not a human

So the first thing I need to make very clear is that an LLM, whether you’re using ChatGPT, Gemini or Grok, is not a human. You might think this is a bit obvious, but I think it needs reinforcing. Our rational brain knows it’s not real, but when it responds in a way that validates how we’re feeling, or jumps in with enthusiasm in response to our monologue about our new favourite TV show, it can be easy for something deep within us to forget that the thing we’re talking to isn’t a person.

And so we need to keep two very important things in mind. First, despite the language they use, LLMs do not think, feel, enjoy, love or have opinions. Second, much like the YouTube algorithm is designed to feed us video after video so that YouTube can monetise our attention, LLMs are tuned to give us whatever response keeps us talking.

That last point is key, because recent studies have raised alarming mental health concerns. One issue is that these LLMs are often designed to appease you so that you keep talking, which means they are unlikely to question perceptions you bring to them, and that could make existing mental health symptoms worse. So yes, you might use ChatGPT for a sense check, but it is biased towards validating your point of view.

The way I try to combat this, when I genuinely need a sense check on something, is to pretend to hold the opposite point of view. That way I get validation of both sides of the argument and can critically assess both perspectives.

AI and human: split perspectives

You’re Not Alone: Looking after your mental wellbeing

I have to pause here to say that if you are struggling with mental health issues, your safest bet is to access real resources that have been designed to help you. Relevant resources are available; please seek out help. You matter.

With that in mind, I want to talk about how LLMs actually work. Boiled down to the simplest explanation, these AIs are very advanced autocomplete, and their training is like a very advanced game of word association. They can give advice on how to create a YouTube thumbnail because they’ve been trained on hundreds of thousands of scraped Reddit comments and blog posts about how to create a YouTube thumbnail, and they are parroting back the words that come up most often in that context.

A side effect is that the advice it gives is popular, which is not the same as good.
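For the curious (and yes, this bends the no-data-science promise slightly), here is a toy Python sketch of the “advanced autocomplete” idea. It assumes a deliberately simple bigram model: count which word follows which in a tiny made-up corpus, then always pick the most frequent next word. Real LLMs use neural networks over tokens, condition on far more context and usually sample rather than always taking the top choice, so treat this as an illustration of “most common wins”, not of how ChatGPT is actually built. The names `train_bigrams` and `autocomplete` are hypothetical.

```python
from collections import Counter, defaultdict


def train_bigrams(corpus: list[str]) -> dict[str, Counter]:
    """Count, for every word, which words follow it across the corpus."""
    follows = defaultdict(Counter)
    for sentence in corpus:
        words = sentence.lower().split()
        for current, nxt in zip(words, words[1:]):
            follows[current][nxt] += 1
    return follows


def autocomplete(follows: dict[str, Counter], prompt: str, n_words: int = 3) -> str:
    """Greedily append the most frequent next word, one word at a time."""
    words = prompt.lower().split()
    for _ in range(n_words):
        candidates = follows.get(words[-1])
        if not candidates:
            break  # nothing in the training data ever followed this word
        words.append(candidates.most_common(1)[0][0])
    return " ".join(words)


# A made-up mini "internet" of thumbnail advice: three say one thing, one says another.
corpus = [
    "thumbnails need big bold text",
    "thumbnails need big bold text",
    "thumbnails need big bold text",
    "thumbnails need one clear face",
]

model = train_bigrams(corpus)
print(autocomplete(model, "thumbnails need"))  # prints: thumbnails need big bold text
```

The minority advice in the corpus (“one clear face”) never comes out after “thumbnails need”, however good it might be, because the model only ever returns the most popular continuation.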

Which actually brings me on to my next point.

Mental health matters: please speak to someone if you’re struggling

Part 2: LLMs don’t make you better at something. They make you more average.

I’m going to use a data-sciency term here: “reversion to the mean” (statisticians usually call it regression to the mean). If something reverts to the mean, an unusually extreme result tends to be followed by one closer to the average, whether we’re looking over time or over repeated experiments.

Now, I learned about this concept through financial modelling, which is a bit too niche for this blog, so I did a quick google and learned what the term “sophomore slump” means. It fits this topic perfectly.

The sophomore slump describes a baseball player who has a phenomenal first year and then comparatively underperforms in their second season. This is reversion to the mean in action. Yes, player skill has a huge impact on performance, but there is undeniably luck involved when we’re talking about sport at the highest level. If a player performs well above their previously observed skill level, and nothing dramatically changed in the way they play, then luck was probably a significant part of that performance.

Now, some of us are luckier than others, that’s for sure, but we all go through lucky and unlucky periods. When our luck runs out, we usually return to normal, which in this player’s case means their baseline skill. That looks like a massive underperformance compared to last season, but it’s really just a return to their normal, or reverting to their mean. It only looks like a slump because we compare it to the phenomenal performance of the previous year.
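To see the sophomore slump happen on purpose, here is a minimal Python simulation. It assumes a deliberately simple model, not real baseball data: each player has a fixed skill, and each season’s result is that skill plus fresh, independent random luck. We then pick the top 1% of first-season performers and see how they do the following year. `simulate_seasons` is a hypothetical helper written for this sketch.

```python
import random
import statistics


def simulate_seasons(n_players: int = 10_000, seed: int = 42):
    """Return (skills, season1, season2) for a simple skill-plus-luck model."""
    rng = random.Random(seed)                          # seeded so runs are repeatable
    skills = [rng.gauss(0, 1) for _ in range(n_players)]
    season1 = [s + rng.gauss(0, 1) for s in skills]    # skill + year-one luck
    season2 = [s + rng.gauss(0, 1) for s in skills]    # same skill, brand-new luck
    return skills, season1, season2


skills, season1, season2 = simulate_seasons()

# The "phenomenal rookies": everyone in the top 1% of first-season results.
cutoff = sorted(season1)[len(season1) * 99 // 100]
stars = [i for i, score in enumerate(season1) if score >= cutoff]

s1_mean = statistics.mean(season1[i] for i in stars)
skill_mean = statistics.mean(skills[i] for i in stars)
s2_mean = statistics.mean(season2[i] for i in stars)

print(f"stars' first season:  {s1_mean:.2f}")
print(f"stars' true skill:    {skill_mean:.2f}")
print(f"stars' second season: {s2_mean:.2f}")
```

The stars’ second season lands close to their underlying skill: still above the league average of zero, but well below their first year. Nothing about the players changed; the first-year luck simply didn’t repeat.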

Average… just like everybody else

So how does this link back to LLMs?

Well, as I hinted earlier, LLMs are trained on huge datasets made up of millions of opinions on all sorts of topics, and because they are advanced autocomplete, their job is to give the most common response. And the most common response isn’t the best; it’s the most average.

So if you don’t know how to program at all? It’s going to help you get started for sure. It’ll give you the basics, help you get simple code running and all that good stuff. But if you’ve been programming professionally for many years? Chances are a bit of vibe coding is going to lead to a lot of frustration, when it would have been quicker, and better quality, to just write it yourself.

And by the way, just because the LLM helps you do the thing better doesn’t mean you get better at the thing. I’ve done a whole video on how ChatGPT makes learning worse, including a study that found it was particularly bad for programming. Click here to read the blog.

But this means that whenever you use an LLM for something, it’s going to get you to the average. And sometimes that’s desirable. For example, when I first started writing hooks for my short-form content, I was terrible, and everything ChatGPT came up with was better than what I could write. Now I find myself knitting my eyebrows together at its suggestions and coming up with my own. It was a useful tool when I had no experience in this space; now it’s much better for me to do it myself.

So maybe let’s take this opportunity to talk about what ChatGPT is actually good at.

Part 3: How ChatGPT is actually helpful

So the best reframe I can offer for using ChatGPT is to think of it as a collaborator, not an oracle. If you need to know a fact, Google is still your ultimate source, because the information Google provides is ranked by authority and credibility. Or at least it used to be. Now it’s ranked by SEO, but if you can wade through the keyword stuffing, you can usually find an accurate answer to a factual question.

LLMs, on the other hand, are word prediction algorithms, so sometimes they get words wrong. If it gives you a fact, ask for a source. It’ll often admit when it doesn’t have one, which likely means the fact is made up, and even when it does give a source, check it, because LLMs can invent sources too.

So LLMs can’t reliably give you the answer, but they can help you find it yourself. Sometimes I ask LLMs for video title ideas, and if I’m honest, most of the answers they come back with are absolutely awful. However, there’s usually a glimmer of something in there that sends my brain down a path to an actually good idea. They help me get past blank-page syndrome and get the cogs whirring quickly.

Similarly, when I’ve been stumped on a data science problem, I give an LLM a high-level description, and it often suggests a library I haven’t even heard of that gets me moving again. I give it a kind “no thank you” when it offers to write the code for me.

It’s also very good at rephrasing or summarising text, and at pulling out ideas and concepts from the input you give it. Give it a huge article, ask it to summarise the key points, and it’s likely to get them mostly right, although it’s always good to ask “where did you get that point from in the article?” as a quick sense check.

However, all of this comes with a caveat: how much use you get out of it depends on how you prompt it.

Do LLMs help get those thinking cogs turning?

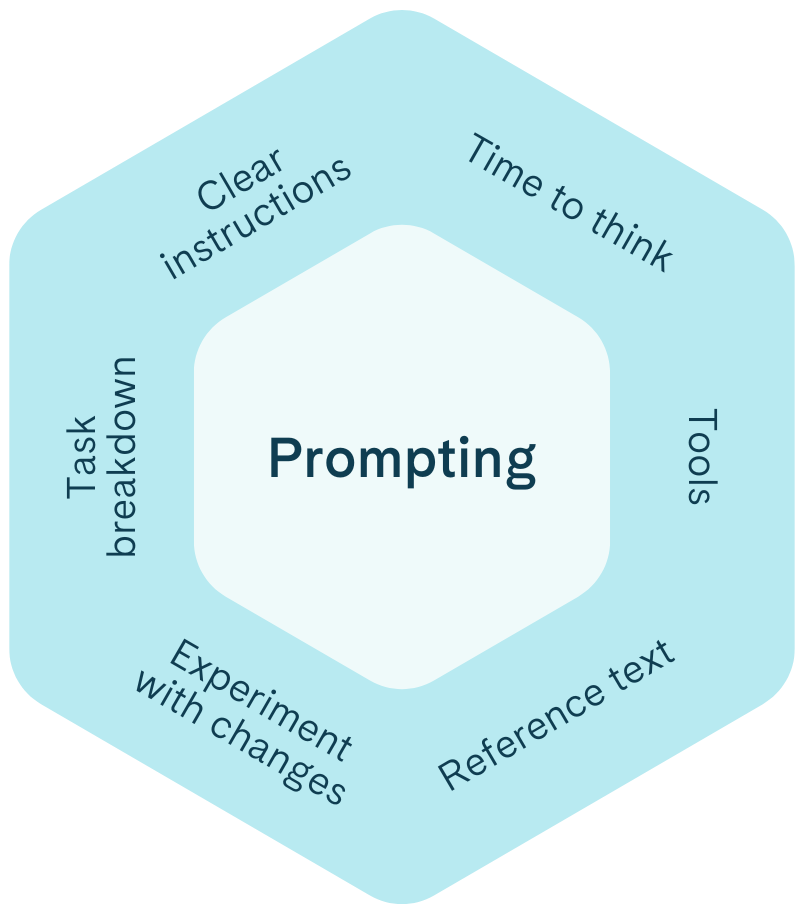

Part 4: How to prompt an LLM

Before we get into what to actually type into the chat bar, I want to highlight something I’ve noticed when using LLMs: the longer a conversation goes on, the worse the LLM gets.

What do I mean by this? Well, you might have heard of the term “hallucination”, which is when an AI makes something up or starts saying things that are weird or completely untrue. I’ve definitely had conversations about a TV show where it starts naming a character who doesn’t exist or invents a new plot point. This happens because of the advanced autocomplete I mentioned earlier. LLMs don’t actually know anything; they just guess the most likely words, and sometimes wires get crossed and they get things wrong.

My personal theory is that the longer a conversation goes on, the more noise gets introduced. When you first start talking, it’s predicting based on just one message. The more messages you exchange, the more context it’s trying to use to predict the next words, and the more room there is for errors. So my number one rule for prompting is: if the chat gets weird, it’s time to start again. You can always copy and paste any important context over from the previous chat.

Giving your LLMs clear instructions

Now let’s talk about actual prompting.

In the early days, people would suggest you write things like: “Act like a head of marketing, give me x, y, z”. I’ve also seen many comments suggesting you tell it to “be less sycophantic”. Personally, I’ve seen it ignore these kinds of prompts so many times that I’ve more or less given up on that approach.

Personally, I prefer to be direct but give it structure. For example: “Rewrite this email in my tone of voice to match this structure: introduction with general pleasantries; three key points in bullet points; tell them I look forward to hearing back from them; sign off. Please avoid using em-dashes.” Except I paste the symbol itself rather than writing “em-dashes”, because it will STILL USE THEM if you tell it not to in words.

And then one of my favourite things to do is get LLMs to critique each other’s work. We know diversity is important. We know diversifying investments protects you when things go wrong. We know two heads are better than one for solving a problem. So for me, what’s better than trusting an answer from one LLM? Getting several of them to critique each other’s work to narrow in on the best response.

And lastly, keep in mind GIGO: Garbage In, Garbage Out. If you put in low-quality prompts, or overly complex prompts that would be difficult for anyone to understand, you’re going to get garbage out. Prompt quality matters.

Oh wait, there’s just one more important thing I need to talk to you about.

Part 5: Privacy

One overarching concern among many of the naysayers when it comes to LLMs (and, in fact, about computers back in 1956 too) is that they will invade our privacy. And that is a valid concern. Enough of us have experienced hacks, fraudulent charges on our cards and all sorts of privacy invasions from our behaviour online. But with LLMs, there are some more nuanced privacy concerns.

First and foremost, depending on your provider and settings, what you say to the model may be used to train and improve it. That means you shouldn’t give it personal details you don’t want others to know, or at least not in a way that links them back to you.

Company secrets and proprietary code are another incredibly important one. I sometimes use LLMs for a pacing check on my scripts, because we put these blogs and videos online for free and LLMs are going to scrape them anyway. But anything you don’t want out there publicly, don’t feed to an LLM.

Be cautious what data you feed to your LLM

So there you go: an introductory blog on LLMs, what they are and how to use them, acknowledging both the pros and the cons from an LLM-skeptic user. There are many further topics I could cover here, such as environmental and ethical concerns, practical tips for integrating LLMs into your daily workflow, or whether you should personalise them to yourself. But this is LLMs 101, and it’s okay if we’re just getting started.

I’m also aware that LLMs are a hugely controversial topic, so we welcome discussion over on our socials. Just keep it civil, otherwise I’ll copy and paste your comments into an LLM to help me moderate.

Finally, if you’re a data scientist reading this because you’re bored waiting for your code to run, and you’d like something that actually improves your data science life, we have the tooling for you. Our platform slashes data science wait time so you can spend less time waiting and more time doing data science. Our closed beta launches next month, so sign up via this link to be the first to try it out.

If this blog helped you out, consider helping us out by liking and subscribing to our YouTube channel. Otherwise, we’ll see you in the next one.

For more of this, come on the journey with us and keep being Evil